Do you specify requests & limits for your Kubernetes pods?

Here is why it’s very important.

When you deploy a pod, Kubernetes assigns it a Quality of Service (QoS) class based on its resource requests and limits.

- Requests: The minimum resources a pod needs to run.

- Limits: The maximum resources a pod is allowed to use.

```yaml
resources:
  requests:
    memory: "256Mi"
    cpu: "250m"
  limits:
    memory: "512Mi"
    cpu: "500m"
```

In this example:

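- The `requests` are set to 250 millicores (1/4 of a CPU core) for CPU and 256 MiB for memory.

- The `limits` are set to 500 millicores (1/2 of a CPU core) and 512 MiB of memory.

- This means the pod needs a node with at least a quarter of a CPU core and 256 MiB of memory still unreserved in order to be scheduled, and it can't use more than half a CPU core and 512 MiB of memory: CPU use above the limit is throttled, and a container that exceeds its memory limit can be OOM-killed.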
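To make the units concrete, here is a minimal Python sketch that converts the quantity strings used above. The helper names `cpu_to_millicores` and `memory_to_bytes` are hypothetical (they are not part of any Kubernetes library), and the sketch only handles the common suffixes: `m` for CPU, binary `Ki`/`Mi`/`Gi`/`Ti` and decimal `k`/`M`/`G`/`T` for memory.

```python
from decimal import Decimal

# Binary suffixes (powers of 1024) are checked before decimal ones (powers of 1000).
MEMORY_SUFFIXES = [
    ("Ki", 1024), ("Mi", 1024**2), ("Gi", 1024**3), ("Ti", 1024**4),
    ("k", 1000), ("M", 1000**2), ("G", 1000**3), ("T", 1000**4),
]

def cpu_to_millicores(quantity: str) -> int:
    """'250m' -> 250, '0.5' -> 500, '2' -> 2000 (1 CPU core = 1000 millicores)."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(Decimal(quantity) * 1000)

def memory_to_bytes(quantity: str) -> int:
    """'256Mi' -> 268435456, '1G' -> 1000000000; a plain number is already bytes."""
    for suffix, multiplier in MEMORY_SUFFIXES:
        if quantity.endswith(suffix):
            return int(Decimal(quantity[: -len(suffix)]) * multiplier)
    return int(quantity)

print(cpu_to_millicores("250m") / 1000)  # 0.25, a quarter of a core
print(cpu_to_millicores("500m") / 1000)  # 0.5, half a core
print(memory_to_bytes("256Mi"))          # 268435456
print(memory_to_bytes("512Mi") // memory_to_bytes("256Mi"))  # 2
```

Note that `Mi` (mebibytes, 1024 × 1024 bytes) and `M` (megabytes, 1000 × 1000 bytes) are different units, which is why `256Mi` is slightly more than 256 MB.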

What is Pod Quality of Service (QoS)?

The Kubernetes scheduler places a pod based on its request values, to ensure the node has enough resources to run it.

However, limits are not checked at scheduling time. Because limits can be higher than requests, the combined limits of the pods on a node can exceed the node's capacity, leaving the node overcommitted.

When pods on an overcommitted node try to use more resources than the node actually has, Kubernetes uses the QoS class to decide which pods to evict first.
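The following Python sketch illustrates the gap between what the scheduler checks and what can overcommit a node. The `PodResources` type and the node size are hypothetical, and the model is deliberately simplified: the real scheduler compares requests against the node's allocatable resources (capacity minus system reservations) and weighs many other factors.

```python
from dataclasses import dataclass

@dataclass
class PodResources:
    # CPU in millicores, memory in MiB (units chosen for this sketch)
    cpu_request: int
    mem_request: int
    cpu_limit: int
    mem_limit: int

def fits_by_requests(pods: list, node_cpu: int, node_mem: int) -> bool:
    """Scheduling check: only the sum of requests is compared with the node."""
    return (sum(p.cpu_request for p in pods) <= node_cpu
            and sum(p.mem_request for p in pods) <= node_mem)

def overcommitted_by_limits(pods: list, node_cpu: int, node_mem: int) -> bool:
    """Limits are not checked when scheduling, so their sum may exceed the node."""
    return (sum(p.cpu_limit for p in pods) > node_cpu
            or sum(p.mem_limit for p in pods) > node_mem)

# Hypothetical node with 2 CPUs and 2Gi of allocatable memory.
node_cpu, node_mem = 2000, 2048
# Two copies of the Burstable nginx pod shown later: requests 500m/500Mi, limits 2 CPUs/2Gi.
burstable = PodResources(cpu_request=500, mem_request=500, cpu_limit=2000, mem_limit=2048)
pods = [burstable, burstable]

print(fits_by_requests(pods, node_cpu, node_mem))         # True: 1000m and 1000Mi requested
print(overcommitted_by_limits(pods, node_cpu, node_mem))  # True: 4000m and 4096Mi of limits
```

Both pods are scheduled, yet if both burst toward their limits at the same time, the node cannot satisfy them, and QoS classes come into play.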

Pod QoS Classes

There are three Pod QoS classes:

- BestEffort

- Burstable

- Guaranteed

Let's take a look at each one with practical examples.

1. BestEffort

Your pod gets the BestEffort class if none of its containers specifies any CPU or memory requests or limits. BestEffort pods can use whatever resources are free on the node, but nothing is reserved for them, and they are the first to be evicted if the node runs out of resources.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-besteffort
spec:
  containers:
  - name: nginx-container
    image: nginx
```

In this configuration:

- There are no resource requests or limits set for the Nginx pod.

- The pod will use whatever resources are available, but there's no guarantee.

- It's the first to be affected when the node runs low on resources.

2. Burstable

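A pod gets the Burstable class if at least one of its containers sets a CPU or memory request or limit, but the pod doesn't meet the Guaranteed criteria, for example because a request is lower than its limit. If the node runs out of resources, Burstable pods are more likely to be evicted than Guaranteed pods, but less likely than BestEffort pods.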

Here is a practical example.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-burstable
spec:
  containers:
  - name: nginx-container
    image: nginx
    resources:
      requests:
        memory: "500Mi"
        cpu: "500m"
      limits:
        memory: "2Gi"
        cpu: "2"
```

In this configuration:

- Nginx is guaranteed a minimum of 0.5 CPU and 500Mi of memory but can use up to 2 CPUs and 2Gi of memory if available.

- This pod is better protected than a BestEffort pod because its requests are reserved, but it is more likely to be evicted than a Guaranteed pod.
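When a node actually runs out of memory, the Linux OOM killer picks which process to kill, and the kubelet steers that choice by giving each container an `oom_score_adj` based on its QoS class (higher values are killed sooner). The Python sketch below follows the values listed in the Kubernetes node-pressure eviction documentation; the function name, the integer arithmetic, and the 4Gi node size are assumptions made for illustration.

```python
GiB = 1024**3
MiB = 1024**2

def oom_score_adj(qos: str, memory_request_bytes: int = 0,
                  node_memory_bytes: int = 4 * GiB) -> int:
    """Approximate oom_score_adj for a container (higher = killed sooner by the OOM killer)."""
    if qos == "Guaranteed":
        return -997
    if qos == "BestEffort":
        return 1000
    # Burstable: the larger the memory request relative to the node, the lower the score,
    # clamped to 2..999 so it always sits between Guaranteed and BestEffort.
    score = 1000 - (1000 * memory_request_bytes) // node_memory_bytes
    return min(max(2, score), 999)

node = 4 * GiB  # hypothetical node with 4Gi of memory
for qos, request in [("BestEffort", 0), ("Burstable", 500 * MiB), ("Guaranteed", 1 * GiB)]:
    print(qos, oom_score_adj(qos, request, node))
```

BestEffort containers always get the highest score and Guaranteed containers the lowest, while a Burstable container lands in between depending on how much memory it requests relative to the node.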

3. Guaranteed

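A pod gets the Guaranteed class if every container sets both CPU and memory limits and each request equals its limit (if you set only a limit, Kubernetes uses it as the request too). Guaranteed pods have the strongest protection: they are the last to be evicted, after any BestEffort and Burstable pods on the node.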

Here is a practical example.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-guaranteed
spec:
  containers:
  - name: nginx-container
    image: nginx
    resources:
      requests:
        memory: "1Gi"
        cpu: "1"
      limits:
        memory: "1Gi"
        cpu: "1"
```

In this configuration:

- Both the memory and CPU requests and limits are the same.

- The pod is guaranteed to have 1 CPU and 1Gi of memory.

- Under node resource pressure, Kubernetes evicts this pod only after BestEffort and Burstable pods.
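The three rules above can be summarized in code. This Python sketch classifies a pod from a dict shaped like the manifests in this article. The `qos_class` function is hypothetical, it only looks at `containers` (not init containers) and at CPU and memory, and it compares quantities as plain strings rather than parsing them.

```python
RESOURCES = ("cpu", "memory")

def qos_class(pod: dict) -> str:
    """Return 'Guaranteed', 'Burstable' or 'BestEffort' for a pod manifest dict."""
    any_set = False
    guaranteed = True
    for container in pod["spec"]["containers"]:
        res = container.get("resources", {})
        limits = res.get("limits", {})
        # Kubernetes defaults an omitted request to the limit, so mirror that here.
        requests = {**limits, **res.get("requests", {})}
        for name in RESOURCES:
            if name in requests or name in limits:
                any_set = True
            # Guaranteed needs a CPU and a memory limit in every container, each equal to
            # its request. Quantities are compared as strings, so write them consistently
            # (e.g. not "1" in one place and "1000m" in another).
            if name not in limits or requests.get(name) != limits[name]:
                guaranteed = False
    if not any_set:
        return "BestEffort"
    return "Guaranteed" if guaranteed else "Burstable"

# The three example pods from this article, as Python dicts.
besteffort = {"spec": {"containers": [{"name": "nginx-container", "image": "nginx"}]}}
burstable = {"spec": {"containers": [{"name": "nginx-container", "image": "nginx",
    "resources": {"requests": {"memory": "500Mi", "cpu": "500m"},
                  "limits": {"memory": "2Gi", "cpu": "2"}}}]}}
guaranteed = {"spec": {"containers": [{"name": "nginx-container", "image": "nginx",
    "resources": {"requests": {"memory": "1Gi", "cpu": "1"},
                  "limits": {"memory": "1Gi", "cpu": "1"}}}]}}

for pod in (besteffort, burstable, guaranteed):
    print(qos_class(pod))  # BestEffort, then Burstable, then Guaranteed
```

One consequence of the defaulting rule is that a container with only CPU and memory limits set (and no requests) still ends up Guaranteed.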

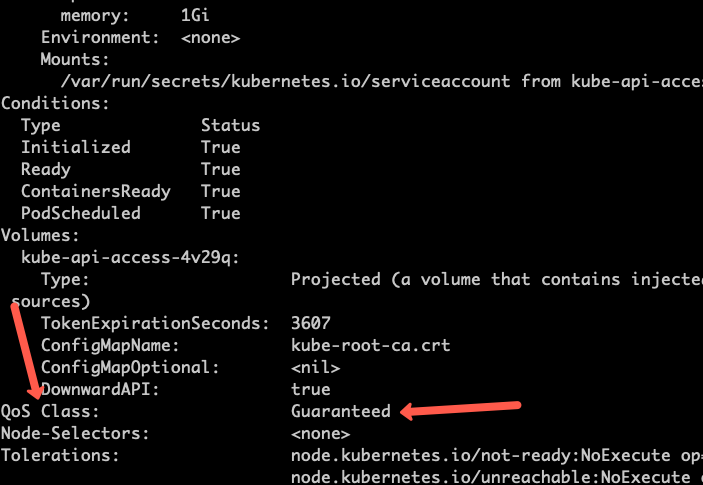

Checking QoS of Existing Pods

To check the Quality of Service (QoS) class of a pod in Kubernetes, you can describe the pod.

```bash
kubectl describe pod nginx-besteffort
```

This prints detailed information about the pod, including its QoS class in the `QoS Class` field (for this pod, `BestEffort`).
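To check many pods at once, note that the QoS class is also stored in each pod's `status.qosClass` field, so it can be read from `kubectl get pods -o json`. The Python sketch below groups pod names by QoS class; the function name is hypothetical and the sample JSON is a trimmed, illustrative stand-in for real cluster output.

```python
import json
from collections import defaultdict

def pods_by_qos(kubectl_json: str) -> dict:
    """Group pod names by status.qosClass from `kubectl get pods -o json` output."""
    groups = defaultdict(list)
    for item in json.loads(kubectl_json)["items"]:
        qos = item.get("status", {}).get("qosClass", "Unknown")
        groups[qos].append(item["metadata"]["name"])
    return dict(groups)

# Trimmed, illustrative sample: real output contains many more fields per pod.
sample = json.dumps({"items": [
    {"metadata": {"name": "nginx-besteffort"}, "status": {"qosClass": "BestEffort"}},
    {"metadata": {"name": "nginx-burstable"}, "status": {"qosClass": "Burstable"}},
    {"metadata": {"name": "nginx-guaranteed"}, "status": {"qosClass": "Guaranteed"}},
]})
print(pods_by_qos(sample))
# {'BestEffort': ['nginx-besteffort'], 'Burstable': ['nginx-burstable'], 'Guaranteed': ['nginx-guaranteed']}
```

Against a live cluster, you could pass it the stdout of `subprocess.run(["kubectl", "get", "pods", "-o", "json"], capture_output=True, text=True)`.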

Pod QoS Best Practices

Here are some best practices for managing Pod Quality of Service (QoS) in Kubernetes:

- Understand Your Application's Needs: Know how much CPU and memory your application typically uses and what it needs to perform well. This understanding will help you set appropriate requests and limits.

- Use Requests and Limits Wisely: Set requests to the minimum your pod needs to run. This ensures your pod gets scheduled on a node with enough resources. Set limits to the maximum you're comfortable with your pod using. This prevents it from using excessive resources and affecting other pods.

- Prefer Guaranteed QoS When Possible: If you can, set requests equal to limits for both CPU and memory in every container. This gives your pod the Guaranteed QoS class, so it is the last to be evicted under node pressure and gets more consistent performance.

- Be Cautious with BestEffort Pods: BestEffort pods are the first to be evicted when resources are low. Use this QoS class only for non-critical tasks or those that can tolerate interruptions.

- Monitor and Adjust: Regularly monitor your pod's performance. If you see that your settings are too high or too low, adjust them. Kubernetes environments can change, and what works today might not be optimal tomorrow.

- Utilize Resource Quotas: In a multi-tenant environment, use resource quotas to limit how much CPU and memory each namespace can use. This prevents any single team or project from using more than its fair share.

- Consider Node Types: If your cluster has nodes with different resource capacities, remember that this can affect pod scheduling and performance. Adjust your requests and limits based on the type of nodes you have.

- Educate Your Team: Make sure everyone involved in deploying applications understands the importance of setting resource requests and limits. Good practices should be part of your team's culture.

- Document and Standardize: Document your QoS policies and best practices. Standardize these across your deployments to maintain consistency and predictability in your cluster's behavior.
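As a starting point for "Understand Your Application's Needs" and "Monitor and Adjust", here is a hypothetical heuristic in Python: it sets requests at a high percentile of observed usage and limits at the observed peak plus headroom. The 95th percentile and the 15% headroom are assumptions to tune, not Kubernetes defaults, and the usage samples would come from a monitoring system such as Prometheus.

```python
def percentile(samples: list[int], pct: int) -> int:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples <= it."""
    ordered = sorted(samples)
    rank = max(1, (pct * len(ordered) + 99) // 100)  # ceil(pct/100 * n) in integer math
    return ordered[rank - 1]

def with_headroom(value: int, headroom_pct: int) -> int:
    """Round value * (1 + headroom_pct/100) up to a whole unit."""
    return (value * (100 + headroom_pct) + 99) // 100

def suggest_resources(cpu_millicores: list[int], memory_mib: list[int],
                      pct: int = 95, headroom_pct: int = 15) -> dict:
    """Hypothetical heuristic: requests at the pct-th percentile, limits at peak + headroom."""
    return {
        "requests": {"cpu": f"{percentile(cpu_millicores, pct)}m",
                     "memory": f"{percentile(memory_mib, pct)}Mi"},
        "limits": {"cpu": f"{with_headroom(max(cpu_millicores), headroom_pct)}m",
                   "memory": f"{with_headroom(max(memory_mib), headroom_pct)}Mi"},
    }

# Illustrative usage samples: CPU 100m..290m and memory 200Mi..390Mi, 20 points each.
cpu_samples = list(range(100, 300, 10))
memory_samples = list(range(200, 400, 10))
print(suggest_resources(cpu_samples, memory_samples))
# {'requests': {'cpu': '280m', 'memory': '380Mi'}, 'limits': {'cpu': '334m', 'memory': '449Mi'}}
```

Because the suggested requests are lower than the limits, a pod using them would be Burstable; setting each limit equal to its request instead would make it Guaranteed, at the cost of reserving more of the node.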

Tools to Optimize Pod QoS

There are several tools available to help you manage and optimize Pod Quality of Service (QoS) in Kubernetes:

- Prometheus: A powerful monitoring tool that integrates well with Kubernetes. It can help you collect, store, and query metrics to understand resource usage.

- KRR (Kubernetes Resource Recommender): A CLI tool for optimizing resource allocation in Kubernetes clusters. It gathers pod usage data from Prometheus and recommends CPU and memory requests and limits.

- kubectl top: This kubectl command shows current CPU and memory usage for nodes or pods. It relies on the Metrics API (usually provided by Metrics Server) and is useful for quick spot checks.

- Kubecost: A tool that provides cost monitoring and management for Kubernetes, helping you understand and optimize your resource allocation.
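Output from `kubectl top pods` can be compared against requests to spot pods that use more than they asked for. According to the Kubernetes node-pressure eviction documentation, the kubelet first considers whether a pod's usage exceeds its requests when ranking pods for eviction. The Python sketch below assumes `kubectl top` prints CPU with an `m` suffix and memory with `Mi`; the function names, the request values, and the sample output are illustrative, not captured from a real cluster.

```python
def parse_top(output: str) -> dict:
    """Parse `kubectl top pods` text into {pod: (cpu_millicores, memory_mib)}.

    Assumes CPU values end in 'm' and memory values end in 'Mi'; other units
    are not handled in this sketch.
    """
    usage = {}
    for line in output.strip().splitlines():
        name, cpu, memory = line.split()[:3]
        if name == "NAME":  # skip the header row if present
            continue
        usage[name] = (int(cpu.removesuffix("m")), int(memory.removesuffix("Mi")))
    return usage

def over_request(usage: dict, requests: dict) -> list:
    """Names of pods whose CPU or memory usage is above their requests."""
    return sorted(name for name, (cpu, mem) in usage.items()
                  if name in requests
                  and (cpu > requests[name][0] or mem > requests[name][1]))

# Illustrative output in the usual `kubectl top pods` layout.
top_output = """
NAME               CPU(cores)   MEMORY(bytes)
nginx-burstable    650m         120Mi
nginx-guaranteed   200m         300Mi
"""
# Requests as (millicores, MiB), taken from the example manifests above.
requests = {"nginx-burstable": (500, 500), "nginx-guaranteed": (1000, 1024)}
print(over_request(parse_top(top_output), requests))  # ['nginx-burstable']
```

A pod that regularly shows up in this list is a candidate for raising its requests.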