In this blog, we will look at:

- Need for Terraform State Locking

- State Locking With DynamoDB

- State Locking with S3 without DynamoDB

- When to use DynamoDB and when to use S3 state locking

Before we get into the details, let's understand some basics.

So, what is state locking?

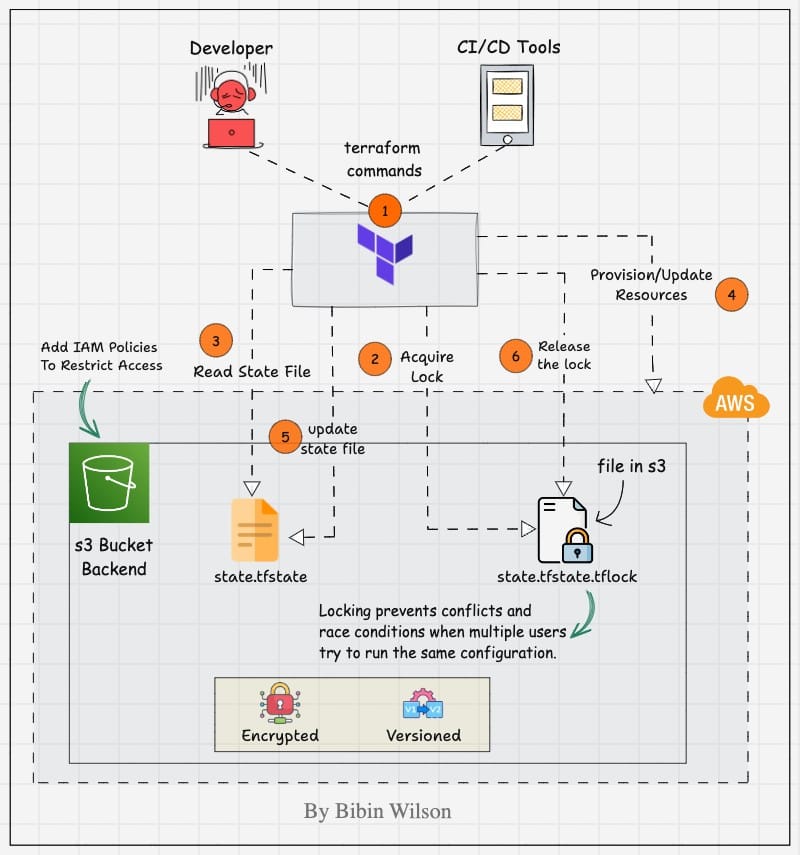

When we look at real-world Terraform implementations, most Terraform infrastructure deployments happen through CI/CD systems.

This means that, at any given point in time, the same Terraform state could be accessed by different CI/CD jobs. Also, multiple developers could access the same state file during the development process.

If multiple Terraform processes use the same state file at the same time, they can overwrite each other's changes and leave the state file conflicting or inconsistent. This is a classic race condition.

So we need state locking to ensure that only one Terraform process modifies the state at a time.

It is like putting a "do not disturb" sign on your state file.
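To make the race condition concrete, here is a minimal Python sketch (not Terraform code) that simulates two runs doing a read-modify-write on a shared state. The state is a simplified dict standing in for terraform.tfstate, and the resource addresses are hypothetical.

```python
import copy


def apply_change(state, resource):
    """Read-modify-write: return a copy of `state` with `resource` added."""
    new_state = copy.deepcopy(state)
    new_state["resources"].append(resource)
    new_state["serial"] += 1  # simplified: bump a version counter on every write
    return new_state


def unlocked_interleaving(initial):
    # No lock: both runs read the same version before either one writes.
    read_by_x = copy.deepcopy(initial)
    read_by_y = copy.deepcopy(initial)
    stored = apply_change(read_by_x, "aws_s3_bucket.logs")  # X writes first
    stored = apply_change(read_by_y, "aws_instance.web")    # Y overwrites X's write
    return stored


def locked_sequence(initial):
    # With a lock: Y can only read the state after X has written it and released the lock.
    stored = apply_change(initial, "aws_s3_bucket.logs")
    stored = apply_change(stored, "aws_instance.web")
    return stored


initial = {"serial": 1, "resources": []}
print(unlocked_interleaving(initial))  # {'serial': 2, 'resources': ['aws_instance.web']}
print(locked_sequence(initial))        # {'serial': 3, 'resources': ['aws_s3_bucket.logs', 'aws_instance.web']}
```

In the unlocked case, X's bucket silently disappears from the state even though it may already exist in AWS.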

State Locking With DynamoDB

In AWS environments, the standard approach is to store the state in S3 and use a DynamoDB table for state locking.

Here is how DynamoDB state locking works.

- When Terraform needs to modify resources, it first acquires a lock by creating an item in a DynamoDB table. The item's LockID key identifies the state file (its S3 bucket and key), so each state file has its own lock.

- If the lock is acquired, Terraform reads the state file from S3.

- Once all the resource modifications are done, Terraform updates the state file and releases the DynamoDB lock.
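The three steps above can be sketched as follows. This is a toy in-memory simulation that does not call AWS, assuming the lock item is keyed by the state file's bucket/key path and that acquiring the lock is a conditional put that fails if the item already exists (the same idea as DynamoDB's attribute_not_exists condition). The class and function names, the bucket name, and the lock info fields are hypothetical and only loosely modeled on what Terraform records.

```python
import json
import uuid


class ConditionalCheckFailed(Exception):
    """Stand-in for DynamoDB's ConditionalCheckFailedException."""


class InMemoryLockTable:
    """Toy stand-in for a DynamoDB table with a LockID partition key."""

    def __init__(self):
        self.items = {}  # LockID -> lock info (JSON string)

    def put_if_absent(self, lock_key, info):
        # Same idea as PutItem with ConditionExpression="attribute_not_exists(LockID)".
        if lock_key in self.items:
            raise ConditionalCheckFailed(self.items[lock_key])
        self.items[lock_key] = info

    def delete(self, lock_key):
        self.items.pop(lock_key, None)


def acquire(table, bucket, key, who):
    """Step 1: lock the state file at s3://bucket/key and return the lock ID."""
    lock_key = f"{bucket}/{key}"   # one lock item per state file
    lock_id = str(uuid.uuid4())    # the kind of ID you would pass to force-unlock
    info = json.dumps({"ID": lock_id, "Who": who, "Operation": "apply"})
    table.put_if_absent(lock_key, info)
    return lock_id


def release(table, bucket, key, lock_id):
    """Step 3: release the lock, but only if we are the holder."""
    lock_key = f"{bucket}/{key}"
    held = json.loads(table.items[lock_key])
    if held["ID"] != lock_id:
        raise PermissionError(f"lock is held by {held['Who']}")
    table.delete(lock_key)


# Usage: X locks the state; Y is rejected until X releases it.
table = InMemoryLockTable()
x_lock = acquire(table, "my-tf-state", "prod/terraform.tfstate", "developer-x")
try:
    acquire(table, "my-tf-state", "prod/terraform.tfstate", "developer-y")
except ConditionalCheckFailed:
    print("developer-y: state is locked, cannot continue")
# ... step 2 would read the state from S3, apply changes, and write it back ...
release(table, "my-tf-state", "prod/terraform.tfstate", x_lock)
y_lock = acquire(table, "my-tf-state", "prod/terraform.tfstate", "developer-y")
print("developer-y acquired the lock")
```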

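For example, when developer X runs terraform apply, Terraform locks the state in DynamoDB. If developer Y runs Terraform against the same state in the meantime, Y's run cannot acquire the lock and has to wait (or fail) until X's run completes and the lock is released.

Note that DynamoDB locks do not expire on their own. If a Terraform process is terminated abnormally while holding the lock (for example, a killed CI/CD job), the lock stays in place until you remove it with terraform force-unlock LOCK_ID. What you can configure is how long a run waits for a lock held by someone else, using the -lock-timeout flag (the default is 0s, which means Terraform fails immediately).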
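Terraform's -lock-timeout flag sets how long a run keeps retrying when another process holds the lock. The retry loop below is a rough Python sketch of that idea, not Terraform's implementation; acquire_with_timeout, try_acquire, retry_interval, and the fake_world helper are hypothetical names, and the clock is injectable so the example runs instantly.

```python
import time


def acquire_with_timeout(try_acquire, lock_timeout, retry_interval=0.5,
                         clock=time.monotonic, sleep=time.sleep):
    """Call try_acquire() until it returns True or lock_timeout seconds pass.

    try_acquire: returns True if it obtained the lock, False if it is held.
    lock_timeout=0 means a single attempt (fail immediately), like
    Terraform's default -lock-timeout of 0s.
    """
    deadline = clock() + lock_timeout
    while True:
        if try_acquire():
            return True
        if clock() >= deadline:
            return False
        sleep(retry_interval)


def fake_world(release_at):
    """Hypothetical helper: a fake clock plus a lock released at `release_at` seconds."""
    now = [0.0]

    def clock():
        return now[0]

    def sleep(seconds):
        now[0] += seconds  # advance fake time instead of really sleeping

    def try_acquire():
        return now[0] >= release_at

    return clock, sleep, try_acquire


clock, sleep, try_acquire = fake_world(release_at=2.0)
print(acquire_with_timeout(try_acquire, lock_timeout=5, clock=clock, sleep=sleep))  # True

clock, sleep, try_acquire = fake_world(release_at=2.0)
print(acquire_with_timeout(try_acquire, lock_timeout=0, clock=clock, sleep=sleep))  # False
```

Waiting only helps when the other run eventually releases the lock; it cannot clear a lock whose holder has already died.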

The following animated image shows the Terraform S3 backend workflow with DynamoDB locking.

State Locking With S3 Lockfile (Experimental Feature)

Now that S3 supports conditional writes, S3 itself can handle concurrency control and state locking.

Conditional writes allow S3 to prevent overwrites unless certain conditions are met. This ensures stronger consistency when multiple processes attempt to update the same S3 object.

For example:

- You can update an object only if it has not changed since the last read.

- This prevents race conditions where two Terraform processes might overwrite the same state file.
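The two kinds of conditional write can be illustrated with a toy object store. This is a Python simulation, not the AWS SDK: if_none_match="*" mirrors the If-None-Match header (create only if the key does not exist), and if_match mirrors If-Match (overwrite only if the ETag is unchanged). A SHA-256 content hash stands in for S3's ETag, and ToyObjectStore and PreconditionFailed are hypothetical names.

```python
import hashlib


class PreconditionFailed(Exception):
    """Stand-in for S3's HTTP 412 Precondition Failed response."""


class ToyObjectStore:
    """In-memory object store with S3-style conditional writes."""

    def __init__(self):
        self.objects = {}  # key -> (body, etag)

    def get(self, key):
        return self.objects[key]  # returns (body, etag)

    def put(self, key, body, if_match=None, if_none_match=None):
        current = self.objects.get(key)
        # If-None-Match: "*" -> only create the object if the key does not exist.
        if if_none_match == "*" and current is not None:
            raise PreconditionFailed(f"{key} already exists")
        # If-Match: <etag> -> only overwrite if the object still has that ETag.
        if if_match is not None and (current is None or current[1] != if_match):
            raise PreconditionFailed(f"{key} changed since it was read")
        etag = hashlib.sha256(body).hexdigest()  # stand-in for S3's ETag
        self.objects[key] = (body, etag)
        return etag


# Usage: X and Y read the same version; only the first conditional write wins.
store = ToyObjectStore()
store.put("terraform.tfstate", b'{"serial": 1}')
_, etag_seen_by_x = store.get("terraform.tfstate")
_, etag_seen_by_y = store.get("terraform.tfstate")

store.put("terraform.tfstate", b'{"serial": 2, "by": "x"}', if_match=etag_seen_by_x)
try:
    store.put("terraform.tfstate", b'{"serial": 2, "by": "y"}', if_match=etag_seen_by_y)
except PreconditionFailed as err:
    print("Y's write rejected:", err)
```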

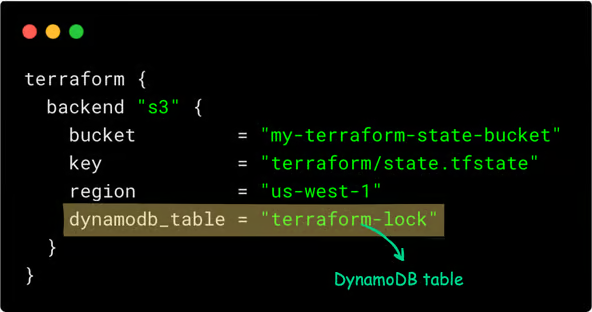

The Terraform backend configuration that uses DynamoDB for state locking looks like this:

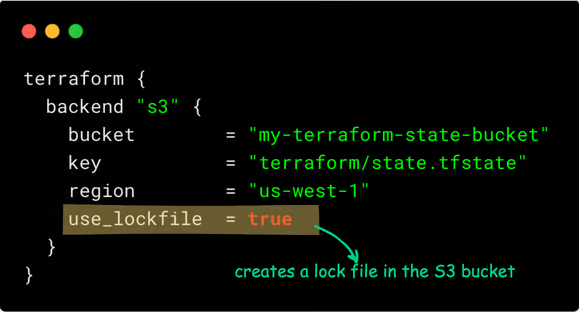

You can replace DynamoDB with S3-native locking by setting use_lockfile = true in the S3 backend configuration, as shown below. (This was introduced as an experimental feature in Terraform 1.10.)

Here is how it works.

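Before modifying the state, Terraform creates a .tflock lock file in S3, next to the state file, to prevent conflicts.

If a lock file already exists, another process holds the lock, so Terraform fails with a lock error instead of proceeding (or keeps retrying if you set -lock-timeout). S3's conditional writes are what make this safe: the lock file is written with a condition that succeeds only if no object with that key exists yet, so two processes cannot both create it.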

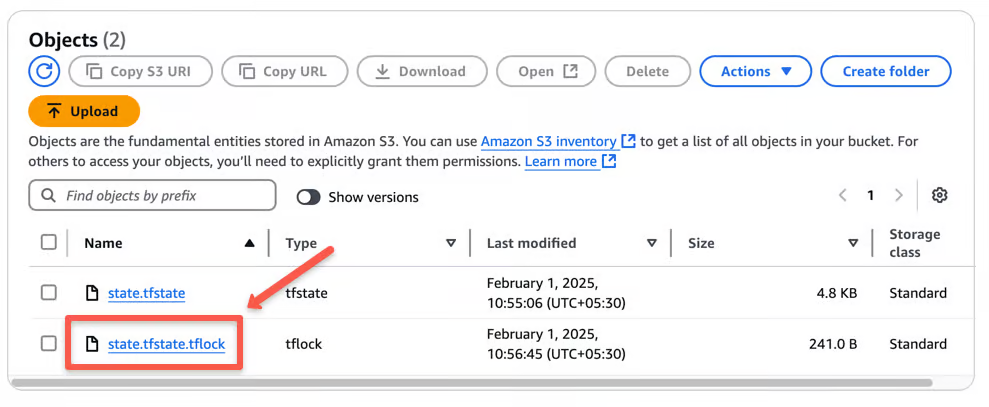
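Putting the lock file workflow together, here is a minimal Python sketch of the create, check, and delete cycle against a toy in-memory bucket. It assumes the lock file lives next to the state file with a .tflock suffix and is created with an If-None-Match style conditional write; ToyBucket, locked_apply, and StateLocked are hypothetical names, and real Terraform records more lock metadata than the ID and Who fields used here.

```python
import json
import uuid


class PreconditionFailed(Exception):
    """Stand-in for S3's HTTP 412 Precondition Failed response."""


class StateLocked(Exception):
    """Raised when another run already holds the lock file."""


class ToyBucket:
    """In-memory stand-in for an S3 bucket (not the AWS SDK)."""

    def __init__(self):
        self.objects = {}

    def put(self, key, body):
        self.objects[key] = body

    def put_if_none_match(self, key, body):
        # Conditional create: fails if an object with this key already exists.
        if key in self.objects:
            raise PreconditionFailed(key)
        self.objects[key] = body

    def get(self, key):
        return self.objects[key]

    def delete(self, key):
        self.objects.pop(key, None)


def locked_apply(bucket, state_key, who, change):
    """Create the lock file, update the state, then delete the lock file."""
    lock_key = state_key + ".tflock"  # assumed naming: next to the state file
    info = json.dumps({"ID": str(uuid.uuid4()), "Who": who})
    try:
        bucket.put_if_none_match(lock_key, info)
    except PreconditionFailed:
        holder = json.loads(bucket.get(lock_key))["Who"]
        raise StateLocked(f"state is locked by {holder}") from None
    try:
        state = json.loads(bucket.get(state_key))
        bucket.put(state_key, json.dumps(change(state)))
    finally:
        bucket.delete(lock_key)  # release the lock even if the change raises


# Usage: while X holds the lock, Y's attempt is rejected.
bucket = ToyBucket()
bucket.put("prod/terraform.tfstate", json.dumps({"serial": 1}))


def change_by_x(state):
    try:
        locked_apply(bucket, "prod/terraform.tfstate", "developer-y", lambda s: s)
    except StateLocked as err:
        print(err)  # state is locked by developer-x
    return {**state, "serial": state["serial"] + 1}


locked_apply(bucket, "prod/terraform.tfstate", "developer-x", change_by_x)
print(bucket.get("prod/terraform.tfstate"))               # {"serial": 2}
print("prod/terraform.tfstate.tflock" in bucket.objects)  # False
```

A process that is killed outright never reaches the finally block, which is why a crashed run can still leave a stale .tflock file behind.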

When you run terraform apply, you can see the .tflock file in S3, as shown below.

Once the run finishes, Terraform deletes the lock file.

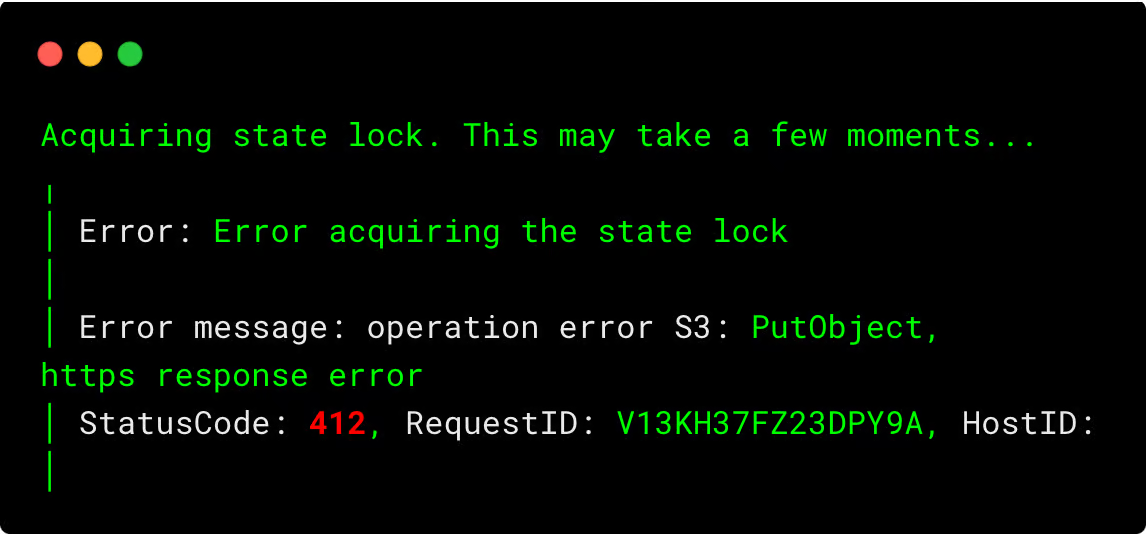

If another user tries to run apply while the lock is held, they get an error similar to the one shown below.

Conclusion

Replacing DynamoDB with use_lockfile simplifies your Terraform setup, but it comes with some trade-offs. Keep in mind that it is still an experimental feature.

So when should you consider using use_lockfile?

- If you want a simpler setup without additional AWS resources.

- If cost is a concern and you don’t want to maintain a DynamoDB table.

- If your Terraform runs are infrequent and don't need heavy concurrency control. S3 has offered strong read-after-write consistency since December 2020, and conditional writes make creating the lock file atomic, but S3 native locking is new and has seen far less real-world use under high concurrency.

When to Stick with DynamoDB

- If you have multiple teams working on the same Terraform state file.

- If you need proven locking for high-frequency Terraform operations. DynamoDB-based locking has been used in production far longer, so its behavior under heavy concurrency and in edge cases (like process crashes) is well understood.

- Also, DynamoDB locking is more battle-tested, while S3 native locking is simpler but newer.

Over to you.

Have you implemented state locking in your projects?

How was your experience setting up the workflow?

Share your thoughts in the comments below!