1.7 - Performance Measurements

Let's evaluate the model's performance on the test dataset.

```python
# Compare the true test labels with the model's predicted values
from sklearn.metrics import accuracy_score, confusion_matrix, classification_report

accuracy = accuracy_score(y_test, y_pred_lr)
confusion_mat = confusion_matrix(y_test, y_pred_lr)
classification = classification_report(y_test, y_pred_lr)

print('Accuracy: ', accuracy*100)
print(classification)
print(confusion_mat)
```

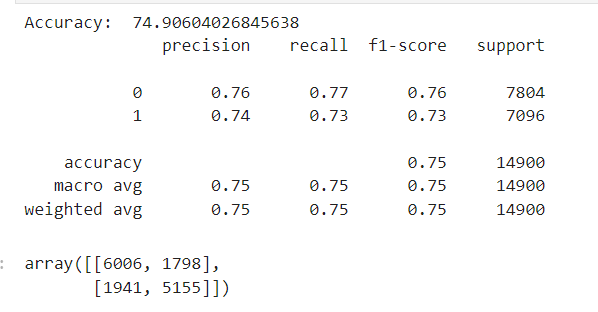

Accuracy is the fraction of all test instances for which the model correctly predicts the target variable (here, attrition). The model reaches approximately 75% accuracy.
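As a quick sanity check, the accuracy can be recomputed by hand from the four confusion-matrix counts reported later in this section. This is only a sketch: the variable names are hypothetical, and the counts are copied from the text rather than taken from the model object.

```python
# Counts copied from the confusion matrix reported in this section.
# Variable names are illustrative, not part of the original notebook.
stayed_correct = 6006   # actual "Stayed", predicted "Stayed"
left_correct = 5155     # actual "Left", predicted "Left"
left_as_stayed = 1941   # actual "Left", predicted "Stayed"
stayed_as_left = 1798   # actual "Stayed", predicted "Left"

total = stayed_correct + left_correct + left_as_stayed + stayed_as_left

# Accuracy = correct predictions / all predictions
accuracy = (stayed_correct + left_correct) / total

print(f"Test instances: {total}")
print(f"Accuracy: {accuracy * 100:.2f}%")
```

The total matches the combined support of both classes (7804 + 7096), and the result rounds to the 75% quoted above.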

Precision indicates the percentage of correctly predicted positives out of the total predicted positives for this class.

- Precision for Class 0 (Stayed): 76% of the instances predicted as "Stayed" are actually "Stayed".
- Precision for Class 1 (Left): 74% of the instances predicted as "Left" are actually "Left".

Recall indicates the percentage of correctly predicted positives out of the actual positives for this class.

- Recall for Class 0 (Stayed): 77% of the instances that are actually "Stayed" are correctly predicted by the model.
- Recall for Class 1 (Left): 73% of the instances that are actually "Left" are correctly predicted by the model.
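The difference between precision and recall comes down to the denominator: precision divides by everything predicted as the class, recall by everything that actually belongs to it. The sketch below illustrates this with the counts reported in this section, assuming they are arranged in scikit-learn's layout (rows are actual classes, columns are predicted classes). That layout is an assumption, but it is consistent with the supports of 7804 and 7096.

```python
# Confusion matrix counts from this section, assumed to follow
# scikit-learn's layout: rows = actual class, columns = predicted class.
cm = [
    [6006, 1798],  # actual Stayed: predicted Stayed, predicted Left
    [1941, 5155],  # actual Left:   predicted Stayed, predicted Left
]
labels = ["Stayed", "Left"]

precision = {}
recall = {}
for k, name in enumerate(labels):
    predicted_as_k = cm[0][k] + cm[1][k]   # column sum: everything predicted as class k
    actually_k = cm[k][0] + cm[k][1]       # row sum: everything that really is class k
    precision[name] = cm[k][k] / predicted_as_k
    recall[name] = cm[k][k] / actually_k

for name in labels:
    print(f"{name}: precision={precision[name]:.2f}, recall={recall[name]:.2f}")
```

Rounded to two decimals, these values agree with the percentages listed above.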

F1-Score is the harmonic mean of precision and recall, providing a single metric that balances both concerns.

- F1-Score for Class 0 (Stayed): 0.76
- F1-Score for Class 1 (Left): 0.73
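To make the harmonic mean concrete, here is a minimal sketch that recomputes the F1-scores from the rounded precision and recall values above. The helper `f1_from` is a hypothetical name introduced for illustration; in practice `classification_report` computes this for you.

```python
def f1_from(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (hypothetical helper)."""
    if precision + recall == 0:
        return 0.0  # avoid division by zero when both are zero
    return 2 * precision * recall / (precision + recall)

# Rounded precision and recall values reported in this section
f1_stayed = f1_from(0.76, 0.77)
f1_left = f1_from(0.74, 0.73)

print(f"F1 (Stayed): {f1_stayed:.2f}")
print(f"F1 (Left):   {f1_left:.2f}")

# The harmonic mean punishes imbalance: a model with high precision
# but very low recall gets a low F1, unlike a plain average.
lopsided = f1_from(0.9, 0.1)
print(f"F1 for precision=0.9, recall=0.1: {lopsided:.2f}")
```

Because precision and recall are close to each other for both classes here, the F1-score lands between them; the lopsided case shows why F1 is preferred over a simple average when the two diverge.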

Support is the number of actual occurrences of the class in the test set.

- Support for Class 0 (Stayed): 7804
- Support for Class 1 (Left): 7096

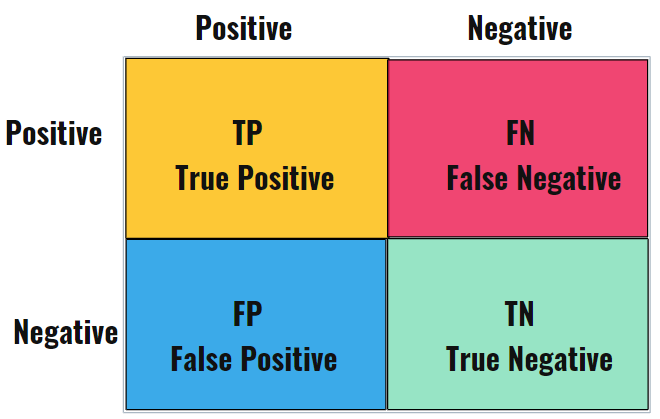

Confusion Matrix provides a summary of prediction results on a classification problem, showing the counts of actual versus predicted outcomes for each class.

Taking "Stayed" (Class 0) as the positive class:

- True Positives (TP): 6006 (correctly predicted "Stayed")
- True Negatives (TN): 5155 (correctly predicted "Left")
- False Positives (FP): 1941 (predicted "Stayed", but the employee actually left; Type I error)
- False Negatives (FN): 1798 (predicted "Left", but the employee actually stayed; Type II error)
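When reading these counts out of the printed matrix, it helps to know how scikit-learn arranges it: rows are actual classes and columns are predicted classes, in sorted label order. The sketch below uses a tiny made-up label set (not the attrition data) purely to show where each cell lands and how the per-class support falls out of the row sums.

```python
from sklearn.metrics import confusion_matrix

# Toy labels for illustration only (NOT the article's data): 0 = Stayed, 1 = Left
y_true = [0, 0, 0, 0, 1, 1, 1]
y_pred = [0, 0, 0, 1, 0, 0, 1]

cm = confusion_matrix(y_true, y_pred, labels=[0, 1])
print(cm)

# ravel() flattens row by row:
# [actual 0 / pred 0, actual 0 / pred 1, actual 1 / pred 0, actual 1 / pred 1]
stayed_correct, stayed_as_left, left_as_stayed, left_correct = cm.ravel()

# Support per class is the row sum (number of actual instances of each class)
support = cm.sum(axis=1)
print("Support:", support.tolist())
```

Note that scikit-learn's documentation conventionally unpacks `ravel()` as `tn, fp, fn, tp`, which treats class 1 as positive. The list above treats "Stayed" (class 0) as positive, so the same cells carry the opposite TP/TN and FP/FN names.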

Summary

Precision and recall are balanced across both classes (all values fall between 0.73 and 0.77), indicating that the model predicts both "Stayed" and "Left" with comparable reliability.

The model also performs slightly better in predicting employees who stayed (Class 0) than those who left (Class 1), but the difference is not large.

Given an accuracy of roughly 75% and balanced precision and recall scores, this logistic regression model performs reasonably well for predicting employee attrition.