LLM and Hugging Face: Question Answering

This type of LLM answers a question based on the context you provide with it as input.

Set up backend code [Node.js and Express.js]

Create a Hugging Face API route for the question-answering backend.

This example uses distilbert/distilbert-base-cased-distilled-squad as the model. DistilBERT is a small, fast, cheap and light Transformer model trained by distilling BERT base. This model is a fine-tuned checkpoint of DistilBERT-base-cased, fine-tuned using (a second step of) knowledge distillation on SQuAD v1.1.
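The route that follows reads two fields from the request body: `input` (the question) and `context`. A model fine-tuned on SQuAD answers by extracting a span from the context, so it may be worth rejecting empty fields before the model is called. A minimal sketch, assuming a helper named `validateQaInput` (hypothetical, not part of the original code):

```javascript
// Hypothetical helper (not part of the original route): checks that a request
// body carries the two fields the question-answering route reads.
function validateQaInput(body) {
  const errors = []
  const { input, context } = body || {}

  if (typeof input !== 'string' || input.trim() === '') {
    errors.push('input (the question) must be a non-empty string')
  }
  if (typeof context !== 'string' || context.trim() === '') {
    errors.push('context must be a non-empty string')
  }

  // valid is true only when both fields passed their checks
  return { valid: errors.length === 0, errors }
}

// Example usage with an empty context
console.log(validateQaInput({ input: 'What is my favorite food?', context: '' }))
```

Inside the route, a failed check could be answered with a 400 status and the `errors` array, so the 500 response stays reserved for failures in the model call itself.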

// Create the question-answering API route.
// Assumes `app` (the Express app, with JSON body parsing such as express.json() enabled)
// and `hf` (the Hugging Face inference client) are already set up in this file.
app.post('/api/question-answer', async (req, res) => {
  const { input, context } = req.body
  try {
    // Ask the model to find the answer to `input` inside `context`
    const response = await hf.questionAnswering({
      model: 'distilbert/distilbert-base-cased-distilled-squad',
      inputs: {
        question: input,
        context: context
      }
    })
    res.status(200).json(response)
  } catch (err) {
    res.status(500).json({
      message: `Error in backend: ${err}`
    })
  }
})

You can try other models, as there are hundreds of models available on Hugging Face. The output format for the call above is:

output:

{
  score: 0.972388505935669,
  start: 39,
  end: 46,
  answer: 'biryani'
}

Set up frontend UI [React]

Create a QuestionAnswer.js component in React and copy the code below.

import React, { useState } from 'react'
import axios from 'axios'

const QuestionAnswer = () => {
  const [input, setInput] = useState('')
  const [context, setContext] = useState('')
  // Holds either the model response ({ answer, ... }) or an error ({ message })
  const [result, setResult] = useState({})

  // Send the question and context to the backend and store the response
  const handleQuestion = async (e) => {
    e.preventDefault()
    try {
      const response = await axios.post('http://localhost:5000/api/question-answer', { input, context })
      setResult(response.data)
    } catch (err) {
      setResult({ message: `Error in sending API call to backend: ${err}` })
    }
  }

  return (
    <>
      <form onSubmit={handleQuestion}>
        <h2>Question Answering</h2>
        <p>Your Context</p>
        <textarea
          className='qa'
          rows='4'
          cols='50'
          value={context}
          onChange={(e) => setContext(e.target.value)}
        >
        </textarea>
        <p>Your Question</p>
        <input
          type='text'
          value={input}
          onChange={(e) => setInput(e.target.value)}
        />
        <button>Generate</button>
      </form>
      <div className='result'>
        <h3>Answer to your question</h3>
        <p>{result.message}</p>
        <p id='pos'>{result.answer}</p>
      </div>
    </>
  )
}

export default QuestionAnswer

Run the application

- Backend

// if nodemon is installed

// in package.json
{
  ....,
  "scripts": {
    "start": "nodemon server.js",
    ----------,
  }
}

// in the command prompt
npm start

// if nodemon is not installed
node server.js

- Frontend

npm start

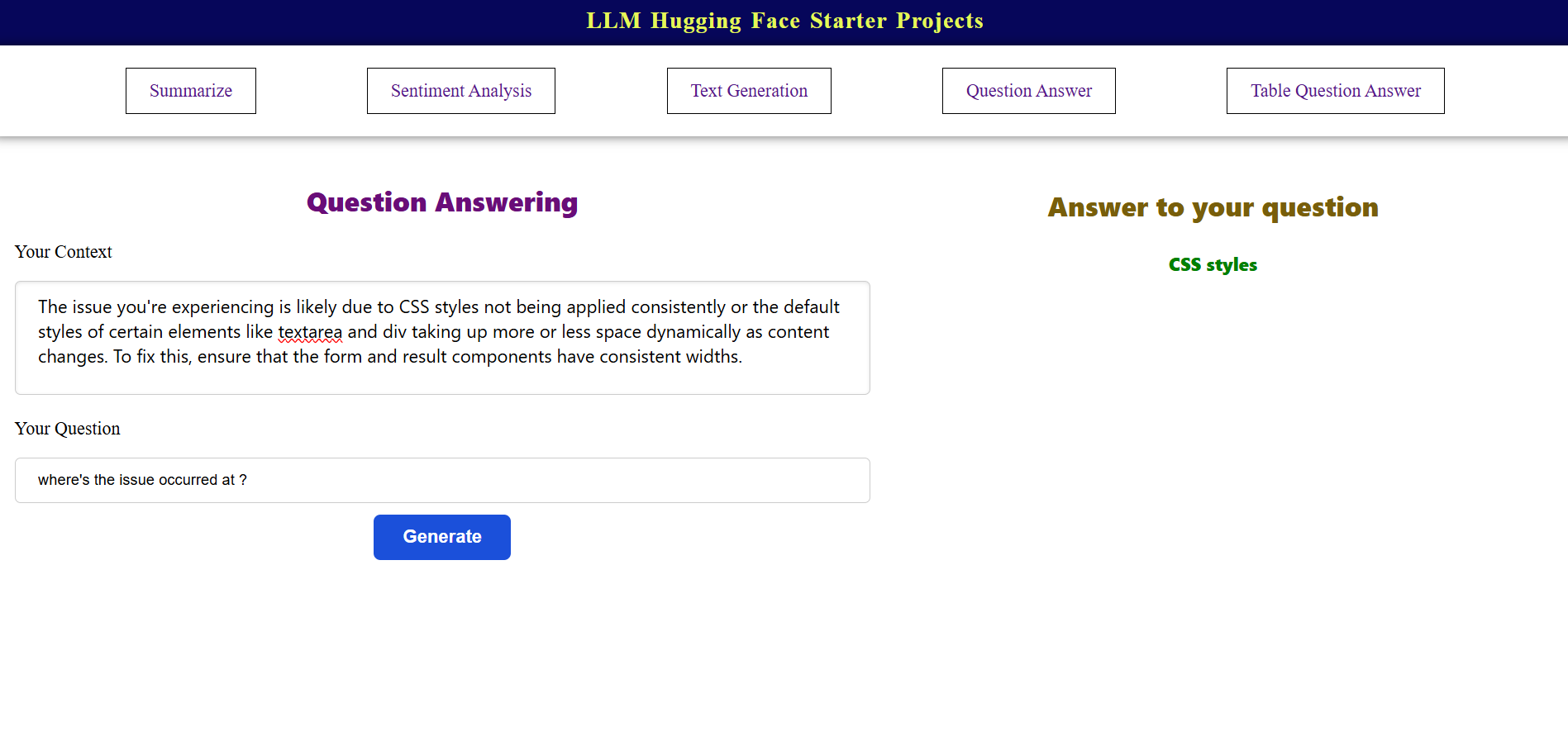

After you type the context and your question, the page renders as shown in the image and displays the result.
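The response from the backend also carries `start` and `end` alongside `answer`. In the sample output earlier, `end - start` equals the length of 'biryani', which suggests they are character offsets into the context with `end` exclusive. Under that assumption, the frontend could mark the answer inside the context instead of only printing it. A minimal sketch with a hypothetical helper, `splitAroundAnswer`, that is not part of the original component:

```javascript
// Hypothetical helper: splits the context around the answer span using the
// `start` and `end` character offsets from the backend response.
// Assumes `end` is exclusive, as the sample output suggests.
function splitAroundAnswer(context, result) {
  const { start, end } = result
  const inRange =
    Number.isInteger(start) && Number.isInteger(end) &&
    start >= 0 && end <= context.length && start < end

  // Fall back to the whole context, unmarked, if the offsets look wrong.
  if (!inRange) {
    return { before: context, answer: '', after: '' }
  }

  return {
    before: context.slice(0, start),
    answer: context.slice(start, end),
    after: context.slice(end)
  }
}

// Example with made-up data; the offsets are computed here rather than
// taken from a real model response.
const sampleContext = 'My favorite food is biryani and I cook it often.'
const sampleStart = sampleContext.indexOf('biryani')
const sampleParts = splitAroundAnswer(sampleContext, {
  start: sampleStart,
  end: sampleStart + 'biryani'.length,
  answer: 'biryani'
})
console.log(`${sampleParts.before}[${sampleParts.answer}]${sampleParts.after}`)
```

In the component, the three parts could be rendered as separate elements, with the middle one styled to stand out.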