Reinforcement Machine Learning

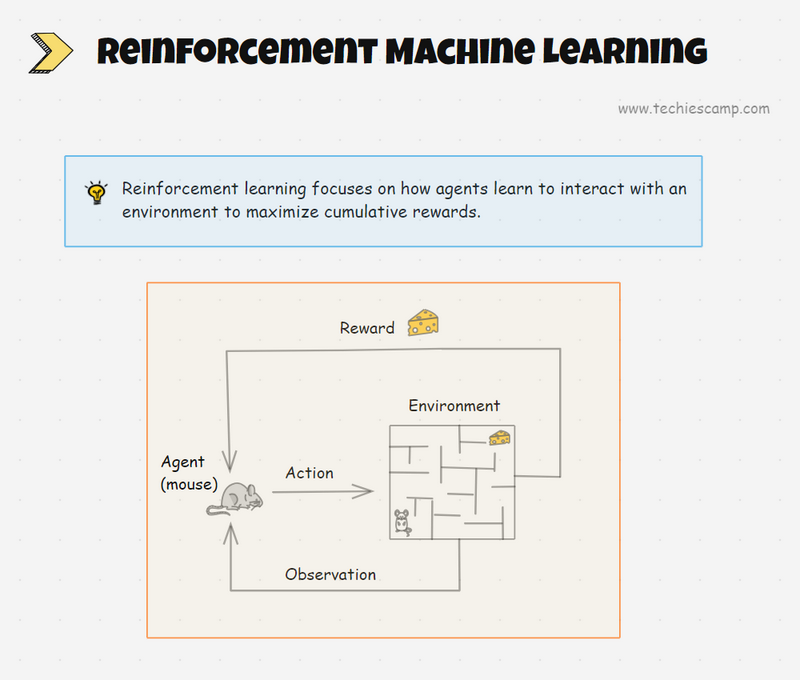

Reinforcement Learning (RL) is a type of machine learning in which an agent learns to make decisions by performing actions in an environment and receiving feedback in the form of rewards or punishments.

In other words, RL focuses on maximizing a cumulative reward signal through trial and error.

Let's say that you are training an AI agent to play a game like chess. The agent explores different moves and receives positive or negative feedback based on the outcome.

Key Terms

- Agent - The decision-maker in RL, such as a robot or the controller of a self-driving car.

- Environment - The world the agent interacts with, such as the game board.

- State - A description of the environment's current situation, such as the position of every piece on the board.

- Action - The choices available to the agent.

- Reward - The feedback the agent receives, indicating the success or failure of its actions.

- Policy - The agent's strategy for choosing actions.

- Value Function - A function estimating the expected cumulative future reward from a given state or state-action pair.
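To see how these terms fit together, here is a minimal sketch of the agent-environment loop. The `CorridorEnv` class, its reward values, and the `random_policy` function are hypothetical names made up for illustration, not part of any RL library.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: positions 0..4, with the goal at position 4."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # Action 0 moves left, action 1 moves right; walls clamp the position.
        move = 1 if action == 1 else -1
        self.state = min(max(self.state + move, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else -0.1  # small penalty per step, bonus at the goal
        return self.state, reward, done


def random_policy(state, rng):
    # Policy: maps a state to an action (here it ignores the state entirely).
    return rng.choice([0, 1])


def run_episode(env, policy, rng, max_steps=100):
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state, rng)             # agent chooses an action
        state, reward, done = env.step(action)  # environment returns state and reward
        total_reward += reward
        if done:
            break
    return total_reward


rng = random.Random(0)
episode_return = run_episode(CorridorEnv(), random_policy, rng)
print(episode_return)
```

A learning algorithm would replace `random_policy` with a policy that improves as rewards come in.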

Types of Reinforcement Algorithms

Reinforcement learning can be categorized into different types based on how the agent learns and how it interacts with the environment. The main categories are:

- Model-Free / Model-Based RL

- Value-Based, Policy-Based, and Actor-Critic Based Methods

Model-Free / Model-Based Reinforcement Learning

Model-Free Reinforcement Learning

In model-free RL, the agent learns through direct interaction with the environment without building or using a model. It solely relies on the rewards received to update its strategy.

Model-free RL is common in environments where the system dynamics are unknown or too complex to model.

A classic example of model-free RL is playing video games without having access to the game's internal mechanics.

The agent learns to play by trial and error, updating its strategy based on the rewards or punishments it receives.

Model-Based Reinforcement Learning

In model-based RL, the agent learns a model of the environment, which predicts the next state and reward given a current state and action.

For example, think of a self-driving car. It might use a model to predict what will happen if it speeds up, slows down, or turns.

Instead of testing each action in real life, it uses its model to simulate and pick the best move, like avoiding an accident or reaching a destination faster.
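The idea of learning a model and then planning inside it can be sketched on a toy problem. This is an illustrative assumption, not how a real self-driving stack works: the five-state corridor, the `true_step` dynamics, and the table-based `model` are all hypothetical, and value iteration is just one possible planner.

```python
import random

N_STATES, ACTIONS, GOAL = 5, (0, 1), 4


def true_step(state, action):
    """Real environment dynamics, which the planner never reads directly."""
    next_state = min(max(state + (1 if action == 1 else -1), 0), N_STATES - 1)
    reward = 1.0 if next_state == GOAL else -0.1
    return next_state, reward


# 1) Learn a model: record the observed outcome of each (state, action) pair.
rng = random.Random(0)
model = {}
for _ in range(500):
    s = rng.randrange(N_STATES - 1)  # sample non-terminal states
    a = rng.choice(ACTIONS)
    model[(s, a)] = true_step(s, a)

# 2) Plan inside the model with value iteration; no further real interaction.
gamma = 0.9
values = [0.0] * N_STATES
for _ in range(50):
    for s in range(N_STATES - 1):
        values[s] = max(
            (r + gamma * values[s2] for (ms, a), (s2, r) in model.items() if ms == s),
            default=0.0,
        )


def plan(state):
    """Pick the action whose simulated outcome looks best under the model."""
    def simulated_return(action):
        next_state, reward = model[(state, action)]
        return reward + gamma * values[next_state]
    return max(ACTIONS, key=simulated_return)


print([plan(s) for s in range(N_STATES - 1)])
```

Because the toy dynamics are deterministic, a lookup table is enough for the model; a noisy or continuous environment would need a learned predictor instead.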

Value-Based, Policy-Based, and Actor-Critic Methods

Value-Based Methods

In value-based methods, the agent learns the value of states or actions to understand which ones lead to the highest long-term reward.

One of the most common value-based algorithms is Q-Learning, where the agent learns a Q-value for each state-action pair to represent the expected cumulative reward of taking that action in that state.
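A minimal sketch of tabular Q-Learning is shown below. The corridor environment, the `step` function, and the hyperparameter values (`alpha`, `gamma`, `epsilon`) are assumptions chosen for illustration; only the update rule itself is the standard Q-Learning rule.

```python
import random

N_STATES, ACTIONS, GOAL = 5, (0, 1), 4
alpha, gamma, epsilon = 0.5, 0.9, 0.2  # learning rate, discount, exploration rate


def step(state, action):
    """Hypothetical corridor: action 1 moves right, action 0 moves left."""
    next_state = min(max(state + (1 if action == 1 else -1), 0), N_STATES - 1)
    done = next_state == GOAL
    return next_state, (1.0 if done else -0.1), done


rng = random.Random(0)
q = [[0.0, 0.0] for _ in range(N_STATES)]  # one Q-value per state-action pair

for _ in range(300):
    state, done = 0, False
    while not done:
        # Epsilon-greedy: mostly exploit the current Q-values, sometimes explore.
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)
        else:
            action = max(ACTIONS, key=lambda a: q[state][a])
        next_state, reward, done = step(state, action)
        # Q-Learning update: move Q(s, a) toward reward + gamma * max_a' Q(s', a').
        target = reward if done else reward + gamma * max(q[next_state])
        q[state][action] += alpha * (target - q[state][action])
        state = next_state

greedy = [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
print(greedy)
```

After training, reading off the highest Q-value in each state gives the learned policy.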

Policy-Based Methods

Policy-based methods don't learn values. Instead, they directly optimize the policy, which is the strategy the agent follows to choose actions.

Policy gradient algorithms are a common approach: they adjust the policy's parameters in the direction that increases the expected reward, and they are especially useful for continuous action spaces.

For example, in a game with a continuous action space, the agent learns a probability distribution over actions directly, rather than calculating a value for each action.
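As a rough sketch of this idea, the snippet below applies a REINFORCE-style policy gradient update to a one-step task with a continuous action. The task, the `target` value, the fixed `sigma`, and the running-average `baseline` are all assumptions made for illustration.

```python
import random

target = 2.0           # hypothetical best action, unknown to the agent
sigma = 0.5            # fixed exploration noise of the Gaussian policy
mu = 0.0               # policy parameter: mean of the action distribution
learning_rate = 0.005
baseline = 0.0         # running average reward, used to reduce gradient variance

rng = random.Random(0)
for _ in range(5000):
    action = rng.gauss(mu, sigma)               # sample a continuous action
    reward = -(action - target) ** 2            # closer to the target is better
    advantage = reward - baseline
    grad_log_prob = (action - mu) / sigma ** 2  # d/d(mu) of log N(action | mu, sigma)
    mu += learning_rate * advantage * grad_log_prob  # policy gradient step
    baseline += 0.05 * (reward - baseline)

print(round(mu, 2))
```

No value is ever computed per action; the policy's mean simply drifts toward actions that earned above-average reward.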

Actor-Critic Methods

The actor-critic method combines the value-based and policy-based approaches.

The actor makes decisions by following a policy (it chooses actions based on the learned policy), while the critic evaluates those actions by estimating the value of each state or state-action pair (the value function).

The critic provides feedback to the actor to improve the policy (strategy).

This hybrid approach can be more stable and sample-efficient, especially in complex environments.

For example, an autonomous car might use an actor-critic model where the critic evaluates the quality of each driving action (like steering or braking) and the actor adjusts its driving strategy based on the critic's feedback.
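The interplay between actor and critic can be sketched with a one-step actor-critic on a toy problem. This is a simplified illustration under stated assumptions: the corridor environment, the tabular softmax actor (`prefs`), the tabular critic (`values`), and the learning rates are all hypothetical choices, far simpler than anything a real vehicle would use.

```python
import math
import random

N_STATES, GOAL = 5, 4
gamma, actor_lr, critic_lr = 0.9, 0.1, 0.2


def step(state, action):
    """Hypothetical corridor: action 1 moves right, action 0 moves left."""
    next_state = min(max(state + (1 if action == 1 else -1), 0), N_STATES - 1)
    done = next_state == GOAL
    return next_state, (1.0 if done else -0.1), done


def action_probs(preferences):
    """Softmax over action preferences (shifted by the max for stability)."""
    top = max(preferences)
    exps = [math.exp(p - top) for p in preferences]
    total = sum(exps)
    return [e / total for e in exps]


rng = random.Random(0)
prefs = [[0.0, 0.0] for _ in range(N_STATES)]  # actor: action preferences per state
values = [0.0] * N_STATES                      # critic: state-value estimates

for _ in range(2000):
    state, done = 0, False
    while not done:
        probs = action_probs(prefs[state])
        action = 0 if rng.random() < probs[0] else 1
        next_state, reward, done = step(state, action)
        # Critic: the TD error says whether the outcome beat the critic's estimate.
        target = reward if done else reward + gamma * values[next_state]
        td_error = target - values[state]
        values[state] += critic_lr * td_error
        # Actor: shift preferences toward actions with positive TD error.
        for a in (0, 1):
            grad = (1.0 if a == action else 0.0) - probs[a]
            prefs[state][a] += actor_lr * td_error * grad
        state = next_state

print([round(action_probs(prefs[s])[1], 2) for s in range(N_STATES - 1)])
```

The printed numbers are the learned probabilities of moving right in each non-terminal state; the critic's TD error is the only feedback the actor receives.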

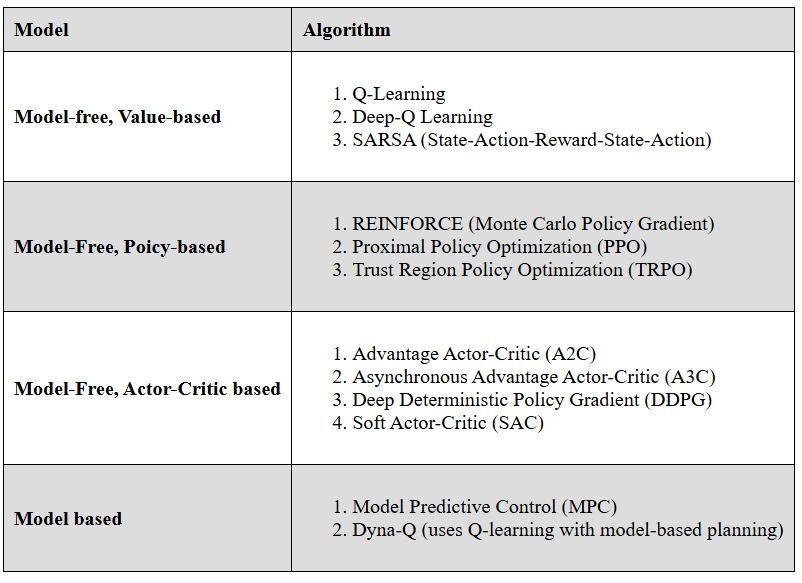

Algorithms for each category

Value-Based (Model-Free): Best for simple problems with discrete states and actions, such as grid-world games.

Policy-Based (Model-Free): Suitable for high-dimensional, continuous action spaces where calculating values for each action is inefficient, e.g., robotic control tasks.

Actor-Critic (Model-Free): Combines stability and flexibility, useful for complex tasks with both discrete and continuous actions.

Model-Based: Ideal when a model of the environment is available or easy to learn, allowing for more efficient planning and decision-making.

Applications of Reinforcement Learning

- Gaming: RL has been successfully used in games like chess, Go, and complex video games (e.g., Dota 2). Agents learn optimal strategies through trial and error, often reaching superhuman levels of skill.

- Robotics: Robots learn to perform tasks like grasping objects, navigating environments, or assembling parts by continuously adjusting their actions to maximize efficiency.

- Autonomous Vehicles: Self-driving cars can use RL to navigate roads, avoid obstacles, and make real-time decisions based on sensor data. RL helps the car adapt to dynamic conditions and optimize its driving policy.

- Recommendation Systems: RL is used to personalize recommendations by learning user preferences over time. The goal is to maximize user satisfaction and engagement rather than simply presenting popular items.

- Healthcare: In healthcare, RL helps in personalized treatment plans and dosage adjustments by learning patient responses to treatments. This personalized approach can improve outcomes and reduce adverse effects.

- Finance: RL can be applied to stock trading, where it learns trading strategies to maximize returns by adapting to market trends and predicting optimal buy/sell actions.

Thus, reinforcement learning is a powerful approach to training intelligent agents, enabling them to make decisions through experience and feedback.

From self-driving cars to video game agents, the applications of RL are vast and growing.